What is the web?

Before attempting to code, it is crucial to comprehend the workings, structure, and logic behind the internet and the web.

The term Internet refers to INTERconnected NETworks: a worldwide infrastructure that links computer networks together.

The Internet emerged in the 1960s as a military and research project in the United States. The initial network, known as ARPANET, was established through partnerships between the US Department of Defense and various universities such as MIT, UCLA, and Stanford. The term “Internet” was coined later on to describe this network.

Until the 1990s, the Internet was mainly utilised by researchers and academics to exchange information and communicate with each other. The Internet was not a commercial platform and was not accessible to the general public.

This academic setting fostered many experiments and innovations, which gave rise to numerous technologies still used today, as well as a period of reflection on how the Internet should be shaped.

Two figures from the research community had a particularly significant impact on the later development of the Internet.

Ted Nelson is recognised for his research into organising, structuring and referencing information. In 1965, he introduced the terms hypertext and hypermedia. His work remained largely theoretical and experimental, with few practical applications; his vision was perhaps too complicated to implement, especially the notion of transclusion (including part of one document in another by reference rather than by copying it).

Still, as a pioneer, he had a substantial impact on the development of the Internet, and his research on hypertextuality and hypermedia paved the way for the World Wide Web.

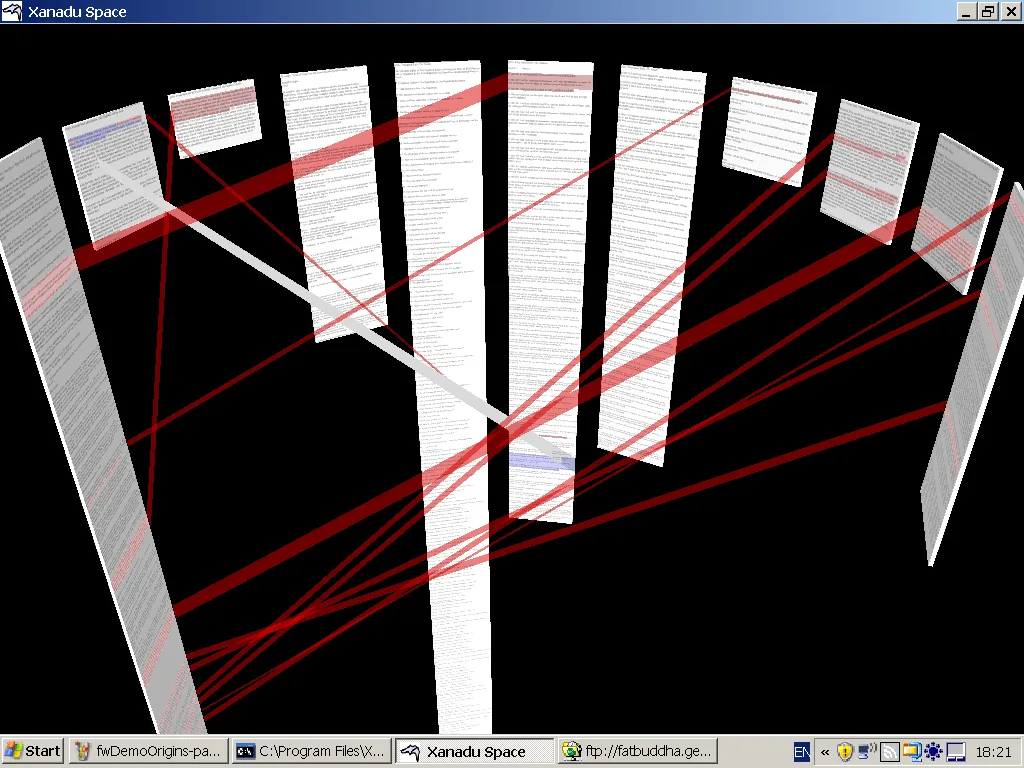

Project Xanadu, developed by Ted Nelson in the 1960s, is a theoretical hypertext system with the goal of creating a global network of hyperlinked documents.

Douglas Engelbart was a leading figure in the field of human-computer interaction (HCI). He is renowned for inventing the computer mouse and for pioneering early forms of the graphical user interface (GUI). Engelbart also made significant contributions to research on hypertext and hypermedia, building on the work pioneered by Ted Nelson.

The Mother of All Demos, presented by Douglas Engelbart in 1968, was a demonstration of NLS (oNLine System), a hypertext system developed by Engelbart and his team at the Stanford Research Institute. The demo showcased several technologies that are now part of our daily lives, including the computer mouse, video conferencing, and collaborative editing.

“Let me introduce the word ‘hypertext’ to mean a body of written or pictorial material interconnected in such a complex way that it could not conveniently be presented or represented on paper.”

A File Structure for the Complex, the Changing, and the Indeterminate, Ted Nelson, 1965

The significance of hypertext lies in its ability to create and traverse non-linear structures. This represents a fundamental shift in our approach to information: a transition from linearity to non-linearity, from sequentiality to parallelism, and from stasis to dynamism.

Hypertext lies at the heart of what we know as the World Wide Web, or simply the web. This technology was developed by Tim Berners-Lee at CERN in Switzerland between 1989 and 1990 and has since become the primary application of the Internet. The web is a system of interconnected hypertext documents accessible via the Internet.

The Internet is the global network of networks: the infrastructure. The web, on the other hand, is one specific application that runs on top of it.

Other applications of the Internet include email (SMTP), file transfer (FTP), online chat (IRC) and peer-to-peer file sharing (BitTorrent), among many others.

The term Web, or World Wide Web (WWW), stems from the metaphor of a spider’s web: a network of connected threads. The web links documents to one another with hyperlinks and hosts them on servers, so that the documents form the interconnected nodes of a single vast network.
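To make the spider’s-web metaphor concrete, here is a minimal Python sketch that models a handful of hypothetical documents as nodes and their hyperlinks as edges, then lists every document reachable from one starting page. The URLs, the `LINKS` table and the `reachable` helper are illustrative assumptions, not part of any real website or library. Breadth-first order is only one of many possible reading paths through the same links, which is exactly what makes hypertext non-linear.

```python
from collections import deque

# Hypothetical documents: each URL maps to the URLs it links to.
# These addresses are illustrative placeholders, not real pages.
LINKS: dict[str, list[str]] = {
    "https://example.org/index.html": [
        "https://example.org/about.html",
        "https://example.net/essay.html",
    ],
    "https://example.org/about.html": ["https://example.org/index.html"],
    "https://example.net/essay.html": [
        "https://example.org/about.html",
        "https://example.com/notes.html",
    ],
    "https://example.com/notes.html": [],
}


def reachable(start: str, links: dict[str, list[str]]) -> list[str]:
    """Return every document reachable from `start` by following hyperlinks,
    in breadth-first order (one of many possible reading orders)."""
    seen = {start}
    order = [start]
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for target in links.get(current, []):
            if target not in seen:  # links can loop back; visit each page once
                seen.add(target)
                order.append(target)
                queue.append(target)
    return order


if __name__ == "__main__":
    for url in reachable("https://example.org/index.html", LINKS):
        print(url)
```

Note that the links span three different (hypothetical) servers and contain a cycle (`about.html` links back to `index.html`); the `seen` set is what keeps the traversal from looping forever.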

On one side is the server, which stores the documents and makes them available. Each document can be accessed through its URL (Uniform Resource Locator), the specific address of that document.
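The parts of a URL can be inspected with Python’s standard `urllib.parse.urlsplit`. The address below is a made-up example on `example.org`, a domain reserved for documentation; the point is only to show which part names the protocol, which names the server, and which locates the document on that server.

```python
from urllib.parse import urlsplit

# A made-up address on example.org, a domain reserved for documentation.
url = "https://example.org/courses/web/index.html?lang=en#history"
parts = urlsplit(url)

print(parts.scheme)    # https: the protocol used to request the document
print(parts.netloc)    # example.org: the server that hosts it
print(parts.path)      # /courses/web/index.html: where it lives on that server
print(parts.query)     # lang=en: optional parameters sent with the request
print(parts.fragment)  # history: a position inside the document (not sent to the server)
```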

On the other side, you have the client, which is the software that requests the documents from the server, reads them, decodes them and usually displays them to the user.

Between the server and the client, communication takes place via HTTP (HyperText Transfer Protocol). The client sends a request to the server, which then sends back a response.
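The request/response cycle can be reproduced on a single machine. This sketch, assuming Python 3.9 or later, starts a tiny local server with the standard `http.server` module and then plays the client with `urllib.request.urlopen`. The `PageHandler` class, the `fetch_once` helper and the page content are hypothetical; a real web server does far more (routing, serving files, security), but the exchange has the same shape: a GET request goes out, and a response with a status code, headers and an HTML body comes back.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.request import urlopen

# The single HTML document our hypothetical server hosts.
PAGE = b"<!DOCTYPE html><html><body><h1>Hello, web</h1></body></html>"


class PageHandler(BaseHTTPRequestHandler):
    """Server side: answer every GET request with the same HTML document."""

    def do_GET(self):
        self.send_response(200)  # status line: HTTP/1.0 200 OK
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(PAGE)))
        self.end_headers()
        self.wfile.write(PAGE)  # response body

    def log_message(self, format, *args):
        pass  # silence the default per-request logging


def fetch_once(path: str = "/index.html") -> tuple[int, str, bytes]:
    """Start a local server, send one GET request as the client, then stop."""
    server = HTTPServer(("127.0.0.1", 0), PageHandler)  # port 0: any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        host, port = server.server_address[:2]
        # Client side: send the request and read the response.
        with urlopen(f"http://{host}:{port}{path}") as response:
            return response.status, response.headers["Content-Type"], response.read()
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    status, content_type, body = fetch_once()
    print(status, content_type)  # 200 text/html; charset=utf-8
    print(body.decode("utf-8"))
```

The `Content-Type` header is how the server tells the client what kind of document it is receiving, which the browser uses to decide how to decode it.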

On the client side, the user employs a browser to request documents from the server. The browser is a piece of software that can read and decode these documents before displaying them to the user.

While browsers cannot decode everything, they are capable of reading specific file formats, including images, videos, sounds, and most importantly documents written in one specific language: HTML (Hypertext Markup Language).

HTML is the primary language of the web. It is a markup language: it annotates text with structural and formatting information, so that the content is interwoven with its formatting instructions.
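Python’s standard `html.parser` module can pull an HTML fragment apart, which makes this blending of content and markup visible. In the sketch below (the fragment and the `MarkupSplitter` class are illustrative), the tags carry the structure and the text nodes carry the content. Once the tags are stripped, even the boundary between the heading and the paragraph disappears.

```python
from html.parser import HTMLParser

# An illustrative fragment: content and markup interleaved in one text.
SOURCE = (
    "<h1>What is the web?</h1>"
    "<p>A system of <a href='/hypertext.html'>hypertext</a> documents.</p>"
)


class MarkupSplitter(HTMLParser):
    """Record the tags (structure) and the text (content) separately."""

    def __init__(self):
        super().__init__()
        self.tags: list[str] = []
        self.text: list[str] = []

    def handle_starttag(self, tag, attrs):
        self.tags.append(tag)

    def handle_data(self, data):
        self.text.append(data)


splitter = MarkupSplitter()
splitter.feed(SOURCE)
splitter.close()

print(splitter.tags)           # ['h1', 'p', 'a']
print("".join(splitter.text))  # What is the web?A system of hypertext documents.
```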

HTML is the web’s standard language, designed specifically for it. Later, other languages were created to extend and reinforce HTML’s functions. The first is CSS (Cascading Style Sheets), used to control the visual presentation; CSS allows information to be separated from its presentation. The second is JavaScript, a programming language used to write scripts. It is the main, and historically the only, programming language that browsers run natively on the client side of the web!

Understanding the connection between the client side and the server side is crucial to understanding how the web works. Here are the fundamental stages of this communication:

On the client side, the user’s browser requests documents from the server and interprets them before displaying them. A plethora of browsers exist or have existed: Netscape Navigator and Internet Explorer were the most popular in the 1990s, before being succeeded by Mozilla Firefox, Google Chrome and Safari in the 2000s.

The main function of web browsers is to convert server responses into formats that can be viewed by users. Typically, if the response is in HTML, the browser decodes it and displays it, then proceeds to decode linked resources such as CSS, JS files, fonts, images, videos, and sounds. Additionally, the browser can execute JavaScript scripts that modify the HTML document after decoding.

To load a webpage, the browser usually needs to make multiple requests to the server, because a webpage is a collection of documents rather than a single file. The first request, triggered when the user types a URL into the address bar, usually retrieves the main HTML document. This document, however, contains links to other documents, such as CSS files, JavaScript files, images, videos, sounds and other HTML documents, and the browser must make additional requests to the server to fetch them.
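The way a browser discovers the extra documents it must request can be approximated by scanning the main HTML for resource references. The sketch below is a deliberate simplification, not how any particular browser works: it treats every `<link href>` and the `src` of `<script>`, `<img>`, `<video>`, `<audio>`, `<source>` and `<iframe>` as something to fetch, resolves relative paths with the standard `urllib.parse.urljoin`, and ignores `<a>` hyperlinks, which are only followed when the user clicks them. The page, its URLs and the `ResourceCollector` class are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical main document returned by the first request.
PAGE_URL = "https://example.org/index.html"
HTML = """<!DOCTYPE html>
<html>
  <head>
    <link rel="stylesheet" href="style.css">
    <script src="/js/app.js"></script>
  </head>
  <body>
    <img src="images/photo.jpg" alt="">
    <a href="about.html">About</a>
  </body>
</html>"""


class ResourceCollector(HTMLParser):
    """List the resources a browser would fetch automatically (simplified)."""

    SRC_TAGS = {"script", "img", "video", "audio", "source", "iframe"}

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.resources: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "link":
            ref = attributes.get("href")
        elif tag in self.SRC_TAGS:
            ref = attributes.get("src")
        else:
            return  # e.g. <a href>: only followed when the user clicks
        if ref:
            # Relative paths are resolved against the main document's URL.
            self.resources.append(urljoin(self.base_url, ref))


collector = ResourceCollector(PAGE_URL)
collector.feed(HTML)
collector.close()
for url in collector.resources:
    print("GET", url)  # one additional request per resource
```

For this invented page, loading it takes four requests in total: the HTML document plus the stylesheet, the script and the image.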

The size of a webpage is the sum of all the documents needed to display it, including not only the HTML file but also all linked resources.
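As a worked example of that sum, here are made-up sizes for a hypothetical page; the file names and byte counts are assumptions for illustration only.

```python
# Illustrative sizes in bytes for the documents of one hypothetical page.
page_resources = {
    "index.html": 18_000,
    "style.css": 42_000,
    "app.js": 350_000,
    "photo.jpg": 1_200_000,
    "font.woff2": 90_000,
}


def page_weight(resources: dict[str, int]) -> int:
    """Total size of a page: the HTML file plus every linked resource."""
    return sum(resources.values())


total = page_weight(page_resources)
share = page_resources["index.html"] / total
print(f"{total:,} bytes, of which the HTML is {share:.1%}")
# 1,700,000 bytes, of which the HTML is 1.1%
```

In this invented example, the HTML file itself accounts for only about 1% of the total; images and scripts make up most of the weight.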

While HTML and CSS are still at the core of the web today, these decades-old structures may not keep up with the rapid evolution of technology, which has prompted a move towards more complex approaches.

As a comparison, let’s consider two personal computers sold by Apple. On one side, the Power Macintosh 8100, released in March 1994, which was at the time the most advanced personal computer ever released by Apple. On the other side, we have its modern equivalent, the Mac Pro, which was most recently updated in June 2023.

| | Power Macintosh 8100 | Mac Pro | Multiplier |
|---|---|---|---|
| Release year | 1994 | 2023 | / |
| Processor speed (MHz) | 80 | 3,680 | x46 |
| Standard RAM (MB) | 8 | 64,000 | x8,000 |
| Standard storage (MB) | 250 | 1,000,000 | x4,000 |
| Price (inflation-adjusted US$) | 8,500 | 6,999 | x0.82 |

Regarding internet speed, let’s compare download speeds. In the late 1990s, most people accessed the internet through 56K dial-up modems, with a theoretical maximum download speed of 56 Kbps (kilobits per second). Today, a good fibre connection can reach around 500 Mbps, and typically around 100 Mbps (100,000 Kbps). Even the typical figure represents a speed increase of roughly 1,800 times.
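The bandwidth comparison is simple division, and the same figures can be turned into download times. This sketch uses nominal speeds in decimal units (1 Kbps = 1,000 bits per second) and ignores latency, protocol overhead and the fact that 56K modems rarely reached their nominal speed; the 1.7 MB page size and the `download_seconds` helper are arbitrary assumptions for illustration.

```python
# Nominal link speeds in bits per second (decimal units: 1 Kbps = 1,000 bps).
MODEM_56K = 56_000
FIBRE_TYPICAL = 100_000_000  # 100 Mbps
FIBRE_FAST = 500_000_000     # 500 Mbps


def download_seconds(size_bytes: int, bits_per_second: int) -> float:
    """Idealised transfer time: ignores latency, overhead and line noise."""
    return size_bytes * 8 / bits_per_second


print(f"Speed-up at 100 Mbps: x{FIBRE_TYPICAL / MODEM_56K:,.0f}")  # x1,786
print(f"Speed-up at 500 Mbps: x{FIBRE_FAST / MODEM_56K:,.0f}")     # x8,929

# A hypothetical 1.7 MB page (the size is an assumption for illustration).
page = 1_700_000
for label, speed in [("56K modem", MODEM_56K), ("100 Mbps fibre", FIBRE_TYPICAL)]:
    print(f"{label}: {download_seconds(page, speed):.2f} s")
# 56K modem: 242.86 s
# 100 Mbps fibre: 0.14 s
```

At these idealised rates, the hypothetical page would take about four minutes over a 56K modem and well under a second over 100 Mbps fibre.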

These figures mean little out of context, but they reveal the astonishing evolution of hardware and software technologies over 30 years.

But while the technology has improved incredibly quickly, the structures behind the web have changed little over the same period. HTML and CSS have of course gone through several versions (now HTML5 and CSS3), and JavaScript gains new features every year, but the underlying structure remains the same: the web is still built on the idea of HTML pages connected by hyperlinks.

To address this issue, many websites now use frameworks that add an extra layer on top of the web’s standard languages: React and Vue for JavaScript, and Bootstrap and Tailwind for CSS, are prominent examples. However, this approach contradicts the original ideology and ideals of the creators of the World Wide Web.

The main needs these frameworks address are greater standardisation across browsers and interfaces that respond better to user interaction and to different screen sizes.

Social media sites are a good example: React was created by Facebook in the early 2010s to help develop more reactive web applications with constant requests and responses that require better integration between server and client.

The problem with these frameworks is that they often produce almost unreadable HTML and rely heavily on JavaScript to display content.
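One way to see this is to compare the text a static page delivers with what a client-rendered application shell delivers before any JavaScript runs. Both documents below are invented, and many framework-based sites mitigate the problem with server-side rendering; the sketch only shows why content that depends on JavaScript is absent from the raw HTML response. `TextExtractor` and `visible_text` are hypothetical helpers built on the standard `html.parser` module.

```python
from html.parser import HTMLParser

# Two hypothetical responses to the same request.
# 1. A hand-written static page: the content is in the HTML itself.
STATIC = "<html><body><h1>My projects</h1><p>Three experiments with hypertext.</p></body></html>"
# 2. A typical client-rendered shell: an empty container plus a script bundle
#    that builds the content in the browser after the page has loaded.
APP_SHELL = '<html><body><div id="root"></div><script src="/bundle.js"></script></body></html>'


class TextExtractor(HTMLParser):
    """Collect the human-readable text present in the HTML as delivered."""

    def __init__(self):
        super().__init__()
        self.chunks: list[str] = []

    def handle_data(self, data):
        if data.strip():
            self.chunks.append(data.strip())


def visible_text(html_source: str) -> str:
    parser = TextExtractor()
    parser.feed(html_source)
    parser.close()
    return " ".join(parser.chunks)


print(repr(visible_text(STATIC)))     # 'My projects Three experiments with hypertext.'
print(repr(visible_text(APP_SHELL)))  # '' (nothing to read until JavaScript runs)
```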

As the architecture described above shows, creating web pages initially meant coding HTML in a technical environment that was neither user-friendly nor easy to understand. Today, by contrast, it relies on a wide range of advanced technologies that non-coders can find complex to understand.

Programmers have developed solutions for non-coders to publish content online, using tools that allow web pages to be created without programming knowledge. As explained earlier, HTML combines content and structure as a markup language. Web 2.0 emphasises the separation of content and structure, facilitated by Content Management Systems (CMS) that allow non-coders to modify website content through an interface.

Later, templates took the place of hand-written CSS and JS, making website design more widely accessible and easier to customise. Users could choose from sets of fonts, colours, sizes and slideshow layouts, for example, through an easy-to-use interface.

The best-known example of this evolution is WordPress, which was initially designed for publishing blogs before evolving into a CMS. It was released in the early 2000s and still powers almost half the world’s websites.

Behind this paradigm shift lies the creation of new server-side languages (as opposed to client-side languages like HTML), the most important of which (used by WordPress, for example) is PHP. A client-side language is interpreted by the client, i.e. the browser, whereas a server-side language is executed only on the server, which then sends the resulting HTML to the client.

These new languages entirely changed the way the client and server communicate.

Here are the fundamental stages of this new way of communicating between client and server:

In this manner, content can be managed independently of the HTML, as it is organised and stored in a dedicated database. The general structure of the pages is pre-coded and never directly accessible to the user, which makes it completely invisible to them. The separation between design/development and content management, between building the website and running it, is absolute, and we observe a division of labour between programmers and non-programmers.
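The server-side process described above can be sketched in a few lines. WordPress does this in PHP; for consistency with the other examples, this minimal sketch uses Python’s standard `sqlite3` and `string.Template`, with an in-memory database standing in for a CMS’s content store. The `posts` table, the template and the `save_post`/`handle_request` functions are hypothetical and say nothing about WordPress’s actual internals. What the sketch does show is the division of labour: the editor only touches content, the developer only the template, and the browser only receives finished HTML.

```python
import sqlite3
from html import escape
from string import Template

# Pre-coded page structure, written once by the developer (hypothetical).
PAGE_TEMPLATE = Template(
    "<!DOCTYPE html><html><head><title>$title</title></head>"
    "<body><h1>$title</h1><p>$body</p></body></html>"
)


def create_database() -> sqlite3.Connection:
    """Content lives in its own database, separate from the HTML."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE posts (slug TEXT PRIMARY KEY, title TEXT, body TEXT)")
    return db


def save_post(db: sqlite3.Connection, slug: str, title: str, body: str) -> None:
    """What an editor's 'Publish' button might do: store content only."""
    db.execute("INSERT OR REPLACE INTO posts VALUES (?, ?, ?)", (slug, title, body))
    db.commit()


def handle_request(db: sqlite3.Connection, path: str) -> tuple[int, str]:
    """Server side: look up the content for the path and fill in the template.
    The client never sees the template or the database, only the result."""
    slug = path.strip("/")
    row = db.execute("SELECT title, body FROM posts WHERE slug = ?", (slug,)).fetchone()
    if row is None:
        return 404, "<!DOCTYPE html><html><body><h1>Not found</h1></body></html>"
    title, body = row
    # Escape the stored text so it cannot break the page structure.
    return 200, PAGE_TEMPLATE.substitute(title=escape(title), body=escape(body))


db = create_database()
save_post(db, "hello", "Hello & welcome", "My first post, written without any HTML.")
status, page = handle_request(db, "/hello")
print(status)
print(page)
```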

While creating a website became effortless, at some point it even became unnecessary to have one. With WordPress, one still has to create a website and choose a template to publish content online. With the rise of social media, however, publishing content no longer requires a website at all, only an account on a platform. The platform then acts as a kind of aggregator: it lets users reach a more personalised audience, and that audience no longer has to visit several irregularly updated websites to find information.

These developments have led to the near disappearance of the blog format (the era of blogging peaked in the late 2000s) and, even more so, of the personal website. Websites are now mostly professional, commercial or artistic tools that exist alongside social media, which acts as the personal side of the internet.

As technology became more powerful and efficient, it tended towards what Donald Norman called the Invisible Computer: technology that disappears from view behind simple, task-focused devices and interfaces. Such invisibility can also alienate users, who lose control over a technology they no longer see or understand.

As some users became increasingly aware of their alienation from a web (and even from technology in general) that was slipping out of their hands because it was over-engineered and structurally over-complicated, a strong nostalgia for the early days of the internet and its 1990s aesthetic emerged among thinkers, designers and even developers: a phenomenon now called netstalgia.

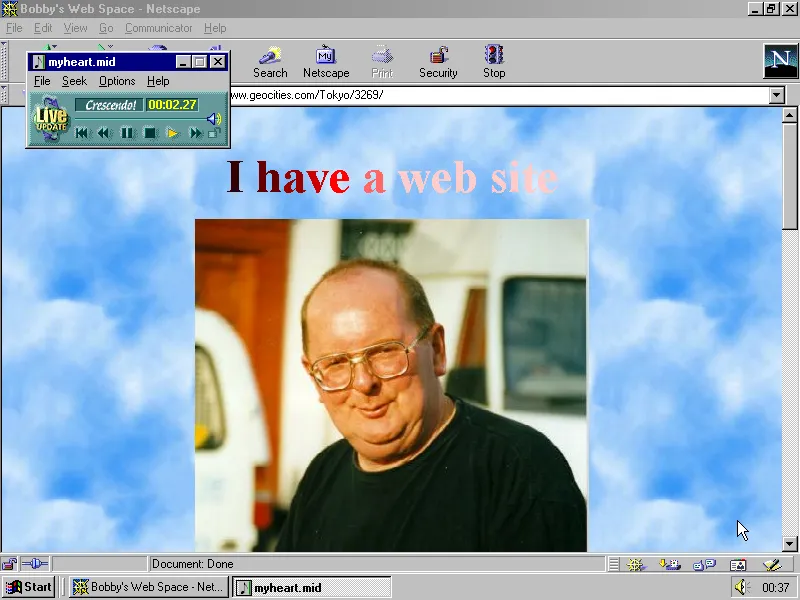

This netstalgia, as the philosopher and artist Olia Lialina has pointed out, is probably based on a distorted vision of the early web as a time when everyone had their own website and knew how to code it in HTML. The reality is much more nuanced. First, the web’s earliest users in the 1990s were mostly people interested in technology rather than the general public, and even those who had websites often attracted little or no audience. More importantly, the first attempts at social media came relatively early in the medium’s history: think of Friendster (2002) or MySpace (2003).

Still, this period has had, and continues to have, a significant influence on net artists and designers. Artists were among the web’s early users and, as early as 1995, began creating webpages that functioned as artworks (notably Heath Bunting and Vuk Cosic). Olia Lialina later became a significant figure as a theorist, historian and philosopher, with extensive research on these subjects and on the emergence of early-web aesthetics. Her research around the GeoCities platform, for example, has led to a very important body of work such as the project One Terabyte Of Kilobyte Age.

One Terabyte Of Kilobyte Age (since 2010) is an ongoing project by Olia Lialina and Dragan Espenschied that archives the contents of the former GeoCities platform. It presents screenshots of the archived webpages, organised by themes and topics.

Today, much of this aesthetic influence can be found in the websites of the brutalist movement, even if the two do not necessarily share the same underlying philosophy. Early-web websites were constrained by the technology of the time and were highly creative in the way they ‘hacked’ the internal structures of the web. The brutalist movement, by contrast, is a reaction to the over-engineering of the web and the user alienation that comes with it: an aesthetic rooted in a very conscious ideology. We will talk more about the brutalist movement later in this block.